In response to my review of his book on the life of Jim Angleton, Michael Holzman has written a thoughtful post on the particular challenges of writing histories of secret intelligence organizations:

Histories of the activities of secret intelligence organizations form a specialized branch of historical research, similar in many ways to military and political history and dissimilar in others. They are similar in that the object of study is almost always a governmental institution, and, like the Army, a secret intelligence organization may produce its own public and private histories and may or may not cooperate with outside historians. They are dissimilar because of the unusual nature of secret intelligence organizations.

The diplomatic historian has at his or her disposal the vast, rich and often astonishingly frank archives of diplomacy, such as the Foreign Relations of the United States (FRUS). Needless to say, there is no publication series entitled the Secret Foreign Operations of the United States (or any other country). What we have instead is something like an archeological site, a site not well-preserved or well-protected, littered with fake artifacts, much missing, much mixed together and all difficult to put in context.

The overwhelming majority of publications about secret intelligence are produced by secret intelligence services as part of their operations, whether purportedly written by “retired” members of those services, by those “close to” such services, by writers commissioned, directly or through third or fourth parties, by such services. There are very few independent researchers working in the field. The most distinguished practitioners, British academics, for example, have dual appointments—university chairs and status as “the historian” of secret intelligence agencies. There are, of course, muckrakers, some of whom have achieved high status among the cognoscenti, but they are muckrakers nonetheless and as such exhibit the professional deformations of their trade, chiefly, a certain obscurity of sourcing and lack of balance in judgment.

Thus, an academically trained researcher taking an interest in this field finds challenges unknown elsewhere. The archives are non-existent, "weeded," or faked; the "literature" is tendentious to a degree not otherwise found outside obscure religious sects; common knowledge, including fundamental matters such as the relative importance of persons and events, is at the very least unreliable; and the research methods themselves are most peculiar. Concerning the latter, the privileged mode is the interview with secret intelligence officials, retired secret intelligence officials, spies and so forth. Authors and researchers will carefully enumerate how many interviews they held, sometimes for attribution, more often not, the latter apparently more valued than the former. This is an unusual practice, not because researchers do not routinely interview those thought to be knowledgeable about the subject at hand, but because these particular interviewees are known to be, by definition, unreliable witnesses. Many are themselves trained interrogators; most are accustomed to viewing their own speech as an instrument for specific operational purposes; nearly all have signed security pledges. The methodological difficulties confronting the researcher seem to allow only a single use for the products of these interviews: the statement that the interviewee on this occasion said this or that, quite without any meaningful application of the statements made.

An additional, unusual barrier to research is the reaction of the ensemble of voices from the secret intelligence world to published research not emanating from that world, or emanating from particular zones not favored by certain voices. Work that can be traced to other intelligence services is discredited for that reason; work from non-intelligence sources is discredited for that reason ("professor so-and-so is unknown to experienced intelligence professionals"); certain topics are off-limits and, curiously, certain topics are de rigueur ("The writer has not mentioned the notorious case y"). And, finally, there is the scattershot of minutiae always on hand for the purpose: dates (down to the day of the week), spelling (often transliterated according to changing conventions), names of secret intelligence agencies and their abbreviations ("Surely the writer realizes that before 19__ the agency in question was known as XXX"). All this is intended to drown out dissident ideas or, more importantly, inconvenient facts and non-received opinions.

What is to be done? One suggestion would be that of scholarly modesty. The scholar would be well advised to accept at the outset that much will never be available. Consider the ULTRA secret: the fact that the British were able to read a variety of high-grade German ciphers during the Second World War. This was known, in one way or another, to hundreds, if not thousands, of people, and yet remained secret for most of a generation. Are we sure that there is no other matter, as significant not only to the history of secret intelligence but to general history, that is not yet known? Secondly, that which does become available must be treated with extraordinary caution in two ways: is it what it purports to be, and how does it fit into a more general context? To point at two highly controversial matters, there is VENONA, the decryptions and interpretations of certain Soviet diplomatic message traffic, and, on a different register, the matter of conspiracy theories. Just to approach the prickly pear of the latter, the term itself was invented by James Angleton, chief of the CIA counterintelligence staff, as a way of discouraging questioning of the conclusions of the Warren Commission. It lives on, an undead barrier to the understanding of many incidents of the Cold War. The VENONA material is available only in a form edited and annotated by American secret intelligence. There are, for example, footnotes assigning certain cover names to certain well-known persons, but no reasons are given for these attributions. Neither the original documents nor the stages of decryption and interpretation have been made available to researchers. And yet great castles of interpretation have been constructed on these foundations.

Intelligence materials can be used, indeed, if available, must be used, if we are to understand certain historical situations: the coups d'état in Iran, Guatemala and Chile, for example. The FRUS itself incorporates secret intelligence materials in its account of the Guatemala matter. But such materials can only be illustrative; the case itself must be made from open sources. There are exceptions: Nazi-era German intelligence records were captured and are now available nearly in their entirety; occasional congressional investigations have obtained substantial amounts of the files of American secret intelligence agencies; other materials find their way, by mistake, into the public realm. But this is a diminuendo of research excellence. The historian concerned with secret intelligence matters must face the unpleasant reality that little can be known about such matters and, from the point of view of the reader, the more certainty with which interpretations are asserted, the more likely it is that such interpretations are yet another secret intelligence operation.

— Michael Holzman

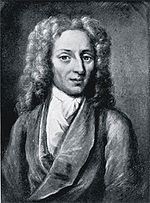

Nicolas Fatio de Duillier (1664-1753) was a Genevan mathematician and polymath who, for a time in the 1680s and 1690s, was a close friend of Isaac Newton. After coming to London in 1687, he became a