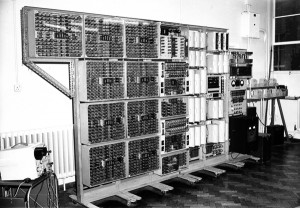

Yesterday, I reported on the restoration of the world’s oldest, still-working modern computer. Last night, British Prime Minister Gordon Brown apologized for the country’s treatment of Alan Turing, computer pioneer. In the words of Brown’s statement:

“Turing was a quite brilliant mathematician, most famous for his work on breaking the German Enigma codes. It is no exaggeration to say that, without his outstanding contribution, the history of World War Two could well have been very different. He truly was one of those individuals we can point to whose unique contribution helped to turn the tide of war. The debt of gratitude he is owed makes it all the more horrifying, therefore, that he was treated so inhumanely. In 1952, he was convicted of ‘gross indecency’ – in effect, tried for being gay. His sentence – and he was faced with the miserable choice of this or prison – was chemical castration by a series of injections of female hormones. He took his own life just two years later.”

It might be considered that this apology required no courage of Brown.

This is not the case. Until very recently, and perhaps still today, there were people who disparaged and belittled Turing’s contribution to computer science and computer engineering. The conventional academic wisdom is that he was only good at the abstract theory and at the formal mathematizing (as in his “schoolboy essay” proposing a test to distinguish human from machine interlocutors), and not good for anything practical. This belief is false. As the philosopher and historian B. Jack Copeland has shown, Turing was actively and intimately involved in the design and construction work (mechanical & electrical) of creating the machines developed at Bletchley Park during WWII, the computing machines which enabled Britain to crack the communications codes used by the Germans.

Perhaps, like me, you imagine this revision to conventional wisdom would be uncontroversial. Sadly, not. On 5 June 2004, I attended a symposium in Cottonopolis to commemorate the 50th anniversary of Turing’s death. At this symposium, Copeland played a recording of an oral-history interview with engineer Tom Kilburn (1921-2001), first head of the first Department of Computer Science in Britain (at the University of Manchester), and also one of the pioneers of modern computing. Kilburn and Turing had worked together in Manchester after WWII. The audience heard Kilburn stress to his interviewer that what he learnt from Turing about the design and creation of computers was all high-level (i.e., abstract) and not very much, indeed only about 30 minutes’ worth of conversation. Copeland then produced evidence (from signing-in books) that Kilburn had attended a restricted, invitation-only, multi-week, full-time course on the design and engineering of computers which Turing had presented at the National Physical Laboratory shortly after the end of WWII, a course organized by the British Ministry of Defence to share some of the learnings of the Bletchley Park people in designing, building and operating computers. If Turing had so little of practical relevance to contribute to Kilburn’s work, why then, one wonders, would Kilburn have turned up each day to Turing’s course?

That these issues were still fresh in the minds of some people was shown by the Q&A session at the end of Copeland’s presentation. Several elderly members of the audience, clearly supporters of Kilburn, took strident and emotive issue with Copeland’s argument, with one of them even claiming that Turing had contributed nothing to the development of computing. I repeat: this took place in Manchester 50 years after Turing’s death! Clearly there were people who did not like Turing, or in some way had been offended by him, and who were still extremely upset about it half a century later. They were still trying to belittle his contribution and his practical skills, despite the factual evidence to the contrary.

I applaud Gordon Brown’s courage in officially apologizing to Alan Turing, an apology which at least ensures the historical record is set straight about what our modern society owes this man.

POSTSCRIPT #1 (2009-10-01): The year 2012 will be a centenary year of celebration of Alan Turing.

POSTSCRIPT #2 (2011-11-18): It should also be noted, concerning Mr Brown’s statement, that Turing died from eating an apple laced with cyanide. He was apparently in the habit of eating an apple each day. These two facts are not, by themselves, sufficient evidence to support a claim that he took his own life.

POSTSCRIPT #3 (2013-02-15): I am not the only person to have questioned the coroner’s official verdict that Turing committed suicide. The BBC reports that Jack Copeland notes that the police never actually tested the apple found beside Turing’s body for traces of cyanide, so it is quite possible it had no traces. The possibility remains that he died from an accidental inhalation of cyanide or that he was deliberately poisoned. Given the evidence, the only rational verdict is an open one.