Brian Dillon reviews a British touring exhibition of the art of John Cage, currently at the Baltic Mill Gateshead.

Two quibbles: First, someone who compares Cage’s 4′ 33” to a blank gallery wall hasn’t actually listened to the piece. If Dillon had compared it to a glass window in the gallery wall, allowing a view of the world outside the gallery, he would have made some sense. But Cage’s composition is not about silence, or even pure sound, for either of which a blank gallery wall might be an appropriate visual representation. The composition is about ambient sound, and about what sounds count as music in our culture.

Second, Dillon rightly mentions that the procedures used by Cage for musical composition from 1950 onwards (and later for poetry and visual art) were based on the Taoist I Ching. But he wrongly describes these procedures as being based on “the philosophy of chance.” Although widespread, this view is nonsense, accurate neither as to what Cage was doing, nor even as to what he may have thought he was doing. Anyone subscribing to the Taoist philosophy underlying them understands the I Ching procedures as exemplifying and manifesting hidden causal mechanisms, not chance. The point of the underlying philosophy is that the random-looking events that result from the procedures express something unique, time-dependent, and personal to the specific person invoking the I Ching at the particular time they invoke it. So, to a Taoist, the resulting music or art is not “chance” or “random” or “aleatoric” at all, but profoundly deterministic, being the necessary consequential expression of deep, synchronistic, spiritual forces. I don’t know if Cage was himself a Taoist (I’m not sure that anyone does), but to an adherent of Taoist philosophy Cage’s own beliefs or attitudes are irrelevant to the workings of these forces. I sense that Cage had sufficient understanding of Taoist and Zen ideas (Zen being the Japanese version of Taoism) to recognize this particular feature: that to an adherent of the philosophy the beliefs of the invoker of the procedures are irrelevant.

In my experience, the idea that the I Ching is a deterministic process is a hard one for many modern westerners to understand, let alone to accept, so entrenched is the prevailing western view that the material realm is all there is. This entrenched view is only historically recent in the west: Isaac Newton, for example, was a believer in the existence of cosmic spiritual forces, and thought he had found the laws which governed their operation. Obversely, many easterners in my experience have difficulty with notions of uncertainty and chance; if all events are subject to hidden causal forces, the concepts of randomness and of alternative possible futures make no sense. My experience here includes making presentations and leading discussions on scenario analyses with senior managers of Asian multinationals.

We are two birds swimming, each circling the pond, warily, neither understanding the other, neither flying away.

References:

Kyle Gann [2010]: No Such Thing as Silence. John Cage’s 4′ 33”. New Haven, CT, USA: Yale University Press.

James Pritchett [1993]: The Music of John Cage. Cambridge, UK: Cambridge University Press.

Monthly Archives: July 2010


Bayesian statistics

One of the mysteries to anyone trained in the frequentist hypothesis-testing paradigm of statistics, as I was, and still adhering to it, as I do, is how Bayesian approaches seem to have taken the academy by storm. One wonders, first, how a theory based – and based explicitly – on a measure of uncertainty defined in terms of subjective personal beliefs could be considered even for a moment for an inter-subjective (ie, social) activity such as Science.

One wonders, second, how a theory justified by appeals to such socially-constructed, culturally-specific, and readily-contestable activities as gambling (ie, so-called Dutch-book arguments) could be taken seriously as the basis for an activity (Science) aiming for, and claiming to achieve, universal validity. One wonders, third, why it is not a little troubling, for all of us living in this finite world, that such justifications require infinite sequences of gambles, even if gambling itself presents no moral, philosophical or other qualms. (You tell me you are certain to beat me if we play an infinite sequence of gambles? Then let me tell you that I have a religion promising eternal life that may interest you in turn.)

One wonders, fourth, where all the prior distributions of beliefs which this theory requires investigators to articulate before doing research are recorded. Surely someone must be writing them down, so that we consumers of science can know that our researchers are honest, and hold them to potential account. That there is such a disconnect between what Bayesian theorists say researchers do and what those researchers demonstrably do should surely trouble anyone contemplating a choice of statistical paradigms. Finally, one wonders how a theory passes muster at the statistical methodology corral when, for a model to be tested, it requires that a non-zero probability already have been allocated to that model, even if the investigators have not yet heard of it, or no one has yet articulated it.

To my mind, Bayesianism is a theory from some other world – infinite gambles, imagined prior distributions, models that disregard time or requirements for constructability, unrealistic abstractions from actual scientific practice – not from our own.

So, how could the Bayesians make as much headway as they have these last six decades? Perhaps it is due to an inherent pragmatism of statisticians – using whatever techniques work, without much regard as to their underlying philosophy or incoherence therein. Or perhaps the battle between the two schools of thought has simply been asymmetric: the Bayesians being more determined to prevail (in my personal experience, to the point of cultism and personal vitriol) than the adherents of frequentism. Greg Wilson’s 2001 PhD thesis explored this question, although without finding definitive answers.

Now, Andrew Gelman and the indefatigable Cosma Shalizi have written a superb paper, entitled “Philosophy and the practice of Bayesian statistics”. Their paper presents another possible reason for the rise of Bayesian methods: that Bayesianism, when used in actual practice, is most often a form of hypothesis-testing, and thus not as untethered to reality as the pure theory would suggest. Their abstract:

A substantial school in the philosophy of science identifies Bayesian inference with inductive inference and even rationality as such, and seems to be strengthened by the rise and practical success of Bayesian statistics. We argue that the most successful forms of Bayesian statistics do not actually support that particular philosophy but rather accord much better with sophisticated forms of hypothetico-deductivism. We examine the actual role played by prior distributions in Bayesian models, and the crucial aspects of model checking and model revision, which fall outside the scope of Bayesian confirmation theory. We draw on the literature on the consistency of Bayesian updating and also on our experience of applied work in social science.

Clarity about these matters should benefit not just philosophy of science, but also statistical practice. At best, the inductivist view has encouraged researchers to fit and compare models without checking them; at worst, theorists have actively discouraged practitioners from performing model checking because it does not fit into their framework.
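Some readers may not have seen what model checking looks like in Bayesian practice. Below is a minimal sketch of a posterior predictive check, one of the techniques Gelman and Shalizi discuss. The model, the data and the test statistic are all my own assumptions for illustration, not taken from their paper:

- the model is a deliberately simple one, independent Bernoulli trials with a uniform Beta(1, 1) prior;
- the data are hypothetical;
- the test statistic is the number of switches between consecutive 0s and 1s.

The check draws parameters from the posterior, simulates replicated data from the fitted model, and asks how often the replicates are as extreme as what was observed. This is recognisably a test of the model against the data.

```python
import random


def count_switches(seq):
    """Test statistic (chosen for illustration): how often consecutive values differ."""
    return sum(1 for a, b in zip(seq, seq[1:]) if a != b)


def posterior_predictive_pvalue(data, n_draws=10_000, prior_a=1.0, prior_b=1.0, seed=0):
    """Posterior predictive check for an iid Bernoulli model with a Beta prior.

    Returns the share of replicated data sets whose switch count is as small
    as, or smaller than, the observed switch count.
    """
    rng = random.Random(seed)
    successes = sum(data)
    failures = len(data) - successes
    observed = count_switches(data)
    extreme = 0
    for _ in range(n_draws):
        # Draw theta from the conjugate Beta posterior.
        theta = rng.betavariate(prior_a + successes, prior_b + failures)
        # Simulate a replicated data set of the same length under the model.
        replicate = [1 if rng.random() < theta else 0 for _ in data]
        if count_switches(replicate) <= observed:
            extreme += 1
    return extreme / n_draws


# Hypothetical data: ten 1s followed by ten 0s, so only one switch.
data = [1] * 10 + [0] * 10
p = posterior_predictive_pvalue(data)
print(f"posterior predictive p-value: {p:.4f}")
```

A very small p-value here says that independent trials are a poor description of data with such long runs. Updating the posterior, on its own, would never have raised that objection.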

References:

Andrew Gelman and Cosma Rohilla Shalizi [2010]: Philosophy and the practice of Bayesian statistics. Available from arXiv.

Mass customization of economic laws

Belatedly, I have just seen a column by John Kay in the FT of 13 April 2010 (subscribers only), entitled “Economics may be dismal, but it is not a science.” His column reminded me of Stephen Toulmin’s arguments in his book Cosmopolis about the universalizing tendencies of modern western culture these last four centuries, which I discussed in an earlier post.

An excerpt from Kay’s column:

Both the efficient market hypothesis and DSGE [Dynamic Stochastic General Equilibrium models] are associated with the idea of rational expectations – which might be described as the idea that households and companies make economic decisions as if they had available to them all the information about the world that might be available. If you wonder why such an implausible notion has won wide acceptance, part of the explanation lies in its conservative implications. Under rational expectations, not only do firms and households know already as much as policymakers, but they also anticipate what the government itself will do, so the best thing government can do is to remain predictable. Most economic policy is futile.

So is most interference in free markets. There is no room for the notion that people bought subprime mortgages or securitised products based on them because they knew less than the people who sold them. When the men and women of Goldman Sachs perform “God’s work”, the profits they make come not from information advantages, but from the value of their services. The economic role of government is to keep markets working.

These theories have appeal beyond the ranks of the rich and conservative for a deeper reason. If there were a simple, single, universal theory of economic behaviour, then the suite of arguments comprising rational expectations, efficient markets and DSGE would be that theory. Any other way of describing the world would have to recognise that what people do depends on their fallible beliefs and perceptions, would have to acknowledge uncertainty, and would accommodate the dependence of actions on changing social and cultural norms. Models could not then be universal: they would have to be specific to contexts.

The standard approach has the appearance of science in its ability to generate clear predictions from a small number of axioms. But only the appearance, since these predictions are mostly false. The environment actually faced by investors and economic policymakers is one in which actions do depend on beliefs and perceptions, must deal with uncertainty and are the product of a social context. There is no universal economic theory, and new economic thinking must necessarily be eclectic. That insight is Keynes’s greatest legacy.

The cultures of mathematics education

I posted recently about the macho culture of pure mathematics, and the undue focus that school mathematics education has on problem-solving and competitive games.

I have just encountered an undated essay, “The Two Cultures of Mathematics”, by Fields Medallist Timothy Gowers, currently Rouse Ball Professor of Mathematics at Cambridge.

Gowers identifies two broad types of research pure mathematicians: problem-solvers and theory-builders. He cites Paul Erdos as an example of the former (as I did in my earlier post), and Michael Atiyah as an example of the latter. What I find interesting is that Gowers believes that the profession as a whole currently favours theory-builders over problem-solvers. And domains of mathematics where theory-building is currently more important (such as Geometry and Algebraic Topology) are favoured over domains of mathematics where problem-solving is currently more important (such as Combinatorics and Graph Theory).

I agree with Gowers here, and wonder, then, why the teaching of mathematics at school still predominantly favours problem-solving over theory-building activities, despite a century of Hilbertian and Bourbakian axiomatics. Is it because problem-solving was the predominant mode of British mathematics in the 19th century (under the pernicious influence of the Cambridge Mathematics Tripos, which retarded pure mathematics in the Anglophone world for a century), and school educators are slow to catch on to later trends? Or is it because the people designing and implementing school mathematics curricula are out of sympathy with, and/or not competent at, theory-building?

Certainly, if your over-riding mantra for school education is instrumental relevance, then the teaching of abstract mathematical theories may be hard to justify (as indeed is the teaching of music or art or ancient Greek).

This perhaps explains how I could learn lots of tricks for elementary arithmetic in day-time classes at primary school, but only discover the rigorous beauty of Euclid’s geometry in special after-school lessons from a sympathetic fifth-grade teacher (Frank Torpie).

History under circumstances not of our choosing

British MP Rory Stewart writing this week about western military policy towards Afghanistan:

We can do other things for Afghanistan but the West – in particular its armies, development agencies and diplomats – are not as powerful, knowledgeable or popular as we pretend. Our officials cannot hope to predict and control the intricate allegiances and loyalties of Afghan communities or the Afghan approach to government. But to acknowledge these limits and their implications would require not so much an anthropology of Afghanistan, but an anthropology of ourselves.

The cures for our predicament do not lie in increasingly detailed adjustments to our current strategy. The solution is to remind ourselves that politics cannot be reduced to a general scientific theory, that we must recognize the will of other peoples and acknowledge our own limits. Most importantly, we must remind our leaders that they always have a choice.

That is not how it feels. European countries feel trapped by their relationship with NATO and the United States. Holbrooke and Obama feel trapped by the position of American generals. And everyone – politicians, generals, diplomats and journalists – feels trapped by our grand theories and beset by the guilt of having already lost over a thousand NATO lives, spent a hundred billion dollars and made a number of promises to Afghans and the West which we are unlikely to be able to keep.

So powerful are these cultural assumptions, these historical and economic forces and these psychological tendencies, that even if every world leader privately concluded the operation was unlikely to succeed, it is almost impossible to imagine the US or its allies halting the counter-insurgency in Afghanistan in the years to come. Roman Emperor Frederick Barbarossa may have been in a similar position during the Third Crusade. Former US President Lyndon B. Johnson certainly was in 1963. Europe is simply in Afghanistan because America is there. America is there just because it is. And all our policy debates are scholastic dialectics to justify this singular but not entirely comprehensible fact.

Postcards to the future

Designer & blogger Russell Davies has an interesting post about sharing, but he is mistaken about books. He says:

A mixtape is a more valuable gift than a spotify playlist because of that embedded value, because everyone knows how much work they are, of the care you have to take, because there is only one. If it gets lost it’s lost. Sharing physical goods is psychically harder than sharing information because goods are more valuable. And, therefore, presumably, the satisfactions of sharing them are greater. I bet there’s some sort of neurological/evolutionary trick in there, physical things will always feel more valuable to us because that’s what we’re used to, that’s what engages our senses. Even though ebooks are massively more convenient, usable and useful than paper ones, that lack of embodiedness nags away at us – telling us that this thing’s not real, not proper, not of value. (And maybe we don’t have the same effect with music because we’re less used to having music engage so many of our senses. It’s pretty unembodied anyway.)

No, it’s not that we value physical objects like books because we are used to doing so, nor (a really silly idea, this) because of some form of long-range evolutionary determinism. (If our pre-literate ancestors only valued physical objects, why did they paint art on cave walls?) No, we value books because they are a tangible reminder to us of the feelings we had while reading them, a souvenir postcard sent from our past self to our future self.

Ebooks are only more convenient, usable and useful than paper ones if your purpose is to read from start to finish, or to dip into them here and there. If instead, as is usually the case with mathematics books, you need to read some chapters or sections while continually referring back to earlier ones (for example, the chapters with definitions), then a physical book with multiple bookmarks is orders of magnitude more convenient, usable and useful than an ebook.

And no African would agree that music is unembodied. You show your appreciation for music you hear by joining in, physically, dancing or singing or tapping a foot or beating a hand in time. Music is actually the most embodied of the arts.

The glass bead game of mathematical economics

Over at the economics blog, A Fine Theorem, there is a post about economic modelling.

My first comment is that the poster misunderstands the axiomatic method in pure mathematics. It is not the case that “axioms are by assumption true”. Truth is a binary relationship between some language or symbolic expression and the world. Pure mathematicians using axiomatic methods make no assumptions about the relationship between their symbolic expressions of interest and the world. Rather, they deduce consequences from the axioms, as if those axioms were true, but without assuming that they are. How do I know they do not assume their axioms to be true? Because mathematicians often work with competing, mutually-inconsistent sets of axioms, for example when they consider both Euclidean and non-Euclidean geometries, or when they study systems which assume the Axiom of Choice alongside systems which do not. Indeed, one could view parts of the meta-mathematical theory called Model Theory as the formal and deductive exploration of multiple, competing sets of axioms.
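A proof assistant makes the same point in miniature. The sketch below is in Lean 4 and is my own illustration; the proposition names are placeholders. Each theorem takes its "axiom" only as a hypothesis, so each proof establishes what follows *if* that axiom holds. A statement P and its negation can each be the starting point of a separate theorem in the same file without any contradiction, because neither is ever asserted to be true.

```lean
-- P stands for some axiom (for instance, a parallel postulate); Q and R are
-- placeholder propositions. Nothing here asserts that P, or ¬P, is true.

-- A consequence derived within a system that adopts P.
theorem consequence_of_P (P Q : Prop) (hP : P) (rule : P → Q) : Q :=
  rule hP

-- A consequence derived within a rival system that adopts ¬P instead.
theorem consequence_of_not_P (P R : Prop) (hNotP : ¬P) (rule : ¬P → R) : R :=
  rule hNotP
```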

On the question of economic modeling, the blogger presents the views of Gerard Debreu on why the abstract mathematicization of economics is something to be desired. One should also point out the very great dangers of this research program, some of which we are suffering now. The first is that people — both academic researchers and others — can become so intoxicated with the pleasures of mathematical modeling that they mistake the axioms and the models for reality itself. Arguably the widespread adoption of financial models assuming independent and normally-distributed errors was the main cause of the Global Financial Crisis of 2008, where the errors of complex derivative trades (such as credit default swaps) were neither independent nor as thin-tailed as Normal distributions are. The GFC led, inexorably, to the Great Recession we are all in now.
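Here is a rough numerical illustration of the thin-tails point. The sketch compares the probability of a move more than z standard deviations above the mean under two distributions:

- a Normal distribution;
- a heavier-tailed Student-t distribution with 3 degrees of freedom, rescaled to unit variance.

The choice of the t distribution, and of 3 degrees of freedom, is my assumption for illustration. It is not a model of any actual derivative position. The t calculation uses the closed-form cumulative distribution function that exists when the degrees of freedom equal 3.

```python
import math


def normal_upper_tail(z):
    """P(Z > z) for a standard Normal variable Z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def t3_upper_tail_unit_variance(z):
    """P(X > z) where X = T / sqrt(3) and T is Student-t with 3 degrees of freedom.

    T has variance 3, so X has variance 1 and is directly comparable with Z.
    Uses the closed-form t_3 CDF: F(t) = 1/2 + (u / (1 + u^2) + atan(u)) / pi
    with u = t / sqrt(3); here t = z * sqrt(3), so u = z.
    """
    cdf = 0.5 + (z / (1.0 + z * z) + math.atan(z)) / math.pi
    return 1.0 - cdf


for z in (2, 3, 4, 5):
    p_norm = normal_upper_tail(z)
    p_t3 = t3_upper_tail_unit_variance(z)
    print(f"z={z}: Normal {p_norm:.3e}  t3 {p_t3:.3e}  ratio {p_t3 / p_norm:,.0f}")
```

At five standard deviations the two probabilities differ sharply:

- Normal: below one in three million;
- heavy-tailed: roughly one in six hundred.

That makes the heavy-tailed probability several thousand times larger. A risk model built on the Normal assumption would treat such a move as practically impossible.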

Secondly, considered only as a research program, this approach has serious flaws. If you were planning to construct a realistic model of human economic behaviour in all its diversity and splendour, it would be very odd to start by modeling only that one very particular, and indeed pathological, type of behaviour exemplified by homo economicus, so-called rational economic man. Acting with infinite mental processing resources and time, with perfect knowledge of the external world, with perfect knowledge of his own capabilities, his own goals, his own preferences, and indeed his own internal knowledge, with perfect foresight or, if not, then with perfect knowledge of a measure of uncertainty overlaid on a pre-specified sigma-algebra of events, and completely unencumbered with any concern for others, with any knowledge of history, or with any emotions, homo economicus is nowhere to be found on any omnibus to Clapham. Starting economic theory with such a creature of fiction would be like building a general theory of human personality from a study only of convicted serial killers awaiting execution, or like articulating a general theory of evolution using only a handbook of British birds. Homo economicus is not where any reasonable researcher interested in modeling the real world would start from in creating a theory of economic man.

And, even if this starting point were not on its very face ridiculous, the fact that economic systems are complex adaptive systems should give economists great pause. Such systems are, typically, not continuously dependent on their initial conditions, meaning that a small change in input parameters can result in a large change in output values. In other words, you could have a model of economic man which was arbitrarily close to, but not identical with, homo economicus, and yet see wildly different behaviours between the two. Simply removing the assumption of infinite mental processing resources creates a very different economic actor from the assumed one, and consequently very different properties at the level of economic systems. Faced with such overwhelming non-continuity (and non-linearity), a naive person might expect economists to be humble about making predictions or giving advice to anyone living outside their models. Instead, we get an entire profession labeling those human behaviours which their models cannot explain as “irrational”.
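The logistic map is a standard toy illustration of how a small change in inputs can produce a large change in outputs. It is not an economic model, and using it here is my own choice. The sketch starts two trajectories whose initial values differ by one part in ten billion, and reports the largest gap between them over 60 steps. The outcome depends on the parameter r:

- with r = 2.8, the system settles to a fixed point and the gap stays tiny;
- with r = 4.0, the gap grows within a few dozen steps to the same order as the state values themselves.

```python
def logistic_trajectory(r, x0, steps):
    """Iterate the logistic map x -> r * x * (1 - x), returning every state."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(r * x * (1.0 - x))
    return xs


def max_divergence(r, x0, delta, steps=60):
    """Largest gap between the trajectories started at x0 and at x0 + delta."""
    a = logistic_trajectory(r, x0, steps)
    b = logistic_trajectory(r, x0 + delta, steps)
    return max(abs(p - q) for p, q in zip(a, b))


for r in (2.8, 4.0):
    gap = max_divergence(r, x0=0.2, delta=1e-10)
    print(f"r = {r}: largest gap over 60 steps = {gap:.3e}")
```

The same one-line rule behaves stably for one parameter value and is wildly sensitive for another. So whether a small deviation from the model's assumptions matters cannot be settled in advance.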

My anger at The Great Wen of mathematical economics arises because of the immorality this discipline evinces: such significant and rare mathematical skills deployed, not to help alleviate suffering or to make the world a better place (as those outside Economics might expect the discipline to aspire to), but to explore the deductive consequences of abstract formal systems, systems neither descriptive of any reality, nor even always implementable in a virtual world.

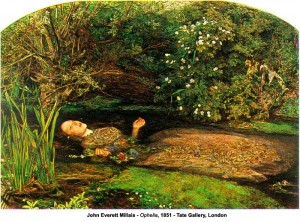

Lady Ophelia of Old Malden

News today that an amateur art-historian, Barbara Webb, has identified the location which Pre-Raphaelite painter John Everett Millais used as background for his 1851 painting of the drowned Ophelia. The location is on the Hogsmill River at Old Malden in south London. It’s a long way from Elsinore.

The after-life of this image has been immense, at least in the English-speaking world. For instance, a print of the painting appears on the wall of the room rented by George Eastman, the humble protagonist of George Stevens’ 1951 movie, A Place in the Sun, a film of Theodore Dreiser’s novel, An American Tragedy. I took the presence of the print on Eastman’s wall not only as a prophecy of the tragedy to come, but also as a reference to Hamlet, since Eastman, as played by Montgomery Clift, is undecided between his two lovers and the two very different fates which his involvement with them entails.