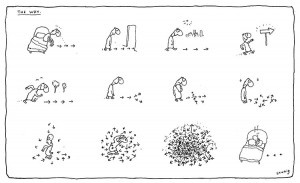

A recurring theme here has been the complexity of most important real-world decision-making, contrary to the models used in much of economics and computer science. Some relevant posts are here, here, and here. On this topic, I recently came across a wonderful cartoon by Michael Leunig, entitled “The Way” (shown above).

I am very grateful to Michael Leunig for permission to reproduce this cartoon here.

Archive for the ‘Decision theory’ Category


Julia Gillard as manager

A description of former Australian PM Julia Gillard’s parliamentary and office management style, by former staffer Nicholas Reece:

Gillard is one of the best close-quarters politicians the Federal Parliament has ever seen.

As prime minister, she ran a disciplined, professional office that operated in much the same way as a well-run law firm – a product of her early career at Slater & Gordon.

Cabinet process was strictly upheld and the massive flow of administrative and policy paperwork that moves between government departments, the prime minister’s office and the prime minister’s desk was dealt with efficiently.

There was courtesy shown to staff, MPs, public servants and stakeholders – every person entitled to a view was given a chance to express it before a decision was made.

Gillard would diligently work her way through the detail of an issue and then patiently execute an agreed plan to tackle it.

She was generous with her time and did not rush people in the way busy leaders often do. She was never rude and never raised her voice, unless for humorous purposes.

She had a quick mind and could master a brief at lightning speed. She was a masterful parliamentary tactician and a brilliant analyst of the day’s events and the politics of the Labor caucus. She was a genuinely affectionate person and had a quick wit that could be deployed to lift the spirits of those around her.

At her instigation, birthdays were the subject of office celebration. This would involve Gillard turning up for cake and delivering a very personal speech to even the most junior staff.

Significantly for a national leader, Gillard had no major personality defects. She is probably the most normal, down-to-earth person to have served as prime minister of Australia in the modern era.

In a crisis, she was supremely calm. While others wilted, Gillard had a resilience that allowed her to keep stepping up to the plate.

She was good at remembering people’s names, knowing their story, understanding their motivations and being able to see a situation from another’s perspective.

These were attributes that were very well suited to the fraught circumstances of the 43rd Parliament.

In the negotiations with the crossbench MPs to form government, Gillard easily outmanoeuvred Tony Abbott. She better understood the independents’ motivations – she focused on the detail of how the relationship between government and the crossbenches would work and committed to serving the full term.

The achievements include: the national broadband network, putting a price on carbon, education reform, children’s dental care and the national disability insurance scheme.

In federal-state relations, there was the negotiation of health reform with the conservative premiers and in foreign affairs there was a strengthening of relations with our major partners, particularly China and the US.

Against this backdrop, it is not surprising that Gillard was well-liked, even loved, among her staff, the public service and most of her caucus.

The science of delegation

Most people, if they think about the topic at all, probably imagine computer science involves the programming of computers. But what are computers? In most cases, these are just machines of one form or another. And what is programming? Well, it is the issuing of instructions (“commands” in the programming jargon) for the machine to do something or other, or to achieve some state or other. Thus, I view Computer Science as nothing more or less than the science of delegation.

When delegating a task to another person, we are likely to be more effective (as the delegator or commander) the more we know about the skills and capabilities and current commitments and attitudes of that person (the delegatee or commandee). So too with delegating to machines. Accordingly, a large part of theoretical computer science is concerned with exploring the properties of machines, or rather, the deductive properties of mathematical models of machines. Other parts of the discipline concern the properties of languages for commanding machines, including their meaning (their semantics) – this is programming language theory. Because the vast majority of lines of program code nowadays are written by teams of programmers, not individuals, much of computer science – part of the branch known as software engineering – is concerned with how to best organize and manage and evaluate the work of teams of people. Because most machines are controlled by humans and act in concert for or with or to humans, another, related branch of this science of delegation deals with the study of human-machine interactions. In both these branches, computer science reveals itself to have a side which connects directly with the human and social sciences, something not true of the other sciences often grouped with Computer Science: pure mathematics, physics, or chemistry.

And from its modern beginnings 70 years ago, computer science has been concerned with trying to automate whatever can be automated – in other words, with delegating the task of delegating. This is the branch known as Artificial Intelligence. We have intelligent machines which can command other machines, and manage and control them in the same way that humans could. But not all bilateral relationships between machines are those of commander-and-subordinate. More often, in distributed networks machines are peers of one another, intelligent and autonomous (to varying degrees). Thus, commanding is useless – persuasion is what is needed for one intelligent machine to ensure that another machine does what the first desires. And so, as one would expect in a science of delegation, computational argumentation arises as an important area of study.

Strategic Programming

Over the last 40-odd years, a branch of Artificial Intelligence called AI Planning has developed. One way to view Planning is as automated computer programming:

- Write a program that takes as input an initial state, a final state (“a goal”), and a collection of possible atomic actions, and produces as output another computer program comprising a combination of the actions (“a plan”) guaranteed to take us from the initial state to the final state.

A prototypical example is robot motion: Given an initial position (e.g., here), a means of locomotion (e.g., the robot can walk), and a desired end-position (e.g., over there), AI Planning seeks to empower the robot to develop a plan to walk from here to over there. If some or all the actions are non-deterministic, or if there are other possibly intervening effects in the world, then the “guaranteed” modality may be replaced by a “likely” modality.
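The view of Planning as automated programming can be made concrete with a minimal sketch. It assumes deterministic actions and a small, finite state space, and it uses plain breadth-first search rather than any particular AI Planning system. The grid world, the `move` helper and the action names are hypothetical, chosen only to mirror the robot example.

```python
from collections import deque

def plan(initial, goal, actions):
    """Breadth-first search for a shortest sequence of actions from initial to goal.

    `actions` maps an action name to a function that takes a state and returns
    the successor state, or None when the action is not applicable there.
    """
    frontier = deque([(initial, [])])
    visited = {initial}
    while frontier:
        state, steps = frontier.popleft()
        if state == goal:
            return steps
        for name, apply_action in actions.items():
            successor = apply_action(state)
            if successor is not None and successor not in visited:
                visited.add(successor)
                frontier.append((successor, steps + [name]))
    return None  # no combination of the actions reaches the goal

def move(dx, dy, size=3):
    """Hypothetical atomic action: one step on a size-by-size grid."""
    def apply_action(state):
        x, y = state[0] + dx, state[1] + dy
        return (x, y) if 0 <= x < size and 0 <= y < size else None
    return apply_action

ACTIONS = {
    "east": move(1, 0),
    "west": move(-1, 0),
    "north": move(0, 1),
    "south": move(0, -1),
}

# "Here" is (0, 0) and "over there" is (2, 1).
robot_plan = plan((0, 0), (2, 1), ACTIONS)
print(robot_plan)
```

The output of `plan` is itself a small program: a list of action names that, executed in order, takes the robot from the initial state to the goal. With non-deterministic actions this guarantee no longer holds and a different technique would be needed.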

Another way to view Planning is in contrast to Scheduling:

- Scheduling is the orderly arrangement of a collection of tasks guaranteed to achieve some goal from some initial state, when we know in advance the initial state, the goal state, and the tasks.

- Planning is the identification and orderly arrangement of tasks guaranteed to achieve some goal from some initial state, when we know in advance the initial state and the goal state, but we don’t yet know the tasks; we only know in advance the atomic actions from which tasks may be constructed.

Relating these ideas to my business experience, I realized that a large swathe of complex planning activities in large companies involves something at a higher level of abstraction. Henry Mintzberg called these activities “Strategic Programming”:

- Strategic Programming is the identification and prioritization of a finite collection of programs or plans, given an initial state and a set of desirable end-states or objectives (possibly conflicting). A program comprises an ordered collection of tasks, and these tasks and their ordering we may or may not know in advance.

Examples abound in complex business domains. You wake up one morning to find yourself the owner of a national mobile telecommunications licence, and with funds to launch a network. You have to buy the necessary equipment and deploy and connect it, in order to provide your new mobile network. Your first decision is where to provide coverage: you could aim to provide nationwide coverage, and not open your service to the public until the network has been installed and connected nationwide. This is the strategy Orange adopted when launching PCS services in mainland Britain in 1994. One downside of waiting till you’ve covered the nation before selling any service to customers is that revenues are delayed.

Another downside is that a competitor may launch service before you, and that happened to Orange: Mercury One2One (as it then was) offered service to the public in 1993, when they had only covered the area around London. The upside of that strategy for One2One was early revenues. The downside was that customers could not use their phones outside the island of coverage, essentially inside the M25 ring-road. For some customer segments, wide-area or nationwide coverage may not be very important, so an early launch may be appropriate if those customer segments are being targeted. But an early launch won’t help customers who need wider-area coverage, and – unless marketing communications are handled carefully – the early launch may position the network operator in the minds of such customers as permanently providing inadequate service. The expectations of both current target customers and customers who are not currently targets need to be explicitly managed to avoid such mis-perceptions.

In this example, the different coverage rollout strategies ended up at the same place eventually, with both networks providing nationwide coverage. But the two operators took different paths to that same end-state. How to identify, compare, prioritize, and select-between these different paths is the very stuff of marketing and business strategy, ie, of strategic programming. It is why business decision-making is often very complex and often intellectually very demanding. Let no one say (as academics are wont to do) that decision-making in business is a doddle. Everything is always more complicated than it looks from outside, and identifying and choosing-between alternative programs is among the most complex of decision-making activities.

Decision-making style

This week’s leadership-challenge-that-wasn’t in the Federal Parliamentary Caucus of the Australian Labor Party saw the likely end of Kevin Rudd’s political career. At the last moment he bottled it, having calculated that he did not have the numbers to win a vote of his caucus colleagues and so deciding not to stand. Ms Gillard was re-elected leader of the FPLP unopposed. Why Rudd failed to win caucus support is explained clearly in subsequent commentary by one of his former speech-writers, James Button:

The trick to government, Paul Keating once said, is to pick three big things and do them well. But Rudd opened a hundred policy fronts, and focused on very few of them. He centralised decision-making in his office yet could not make difficult decisions. He called climate change the greatest moral challenge of our time, then walked away from introducing an emissions trading scheme. He set a template for governing that Labor must move beyond.

On Thursday, for the third time in three years, a large majority of Rudd’s caucus colleagues made it clear that they did not want him as leader. Yet for years Rudd seemed as if he would never be content until he returned as leader. On Friday he said that he would never again seek the leadership of the party. He must keep his word, or else the impasse will destabilise and derail the party until he leaves Parliament.

Since losing the prime ministership, Rudd never understood that for his prospects to change within the government he had to openly acknowledge, at least in part, that there were sensible reasons why Gillard and her supporters toppled him in 2010. Then, as hard as it would have been, he had to get behind Gillard, just as Bill Hayden put aside his great bitterness and got behind Bob Hawke and joined his ministry after losing the Labor leadership to him in 1983.

Yes, Rudd’s execution was murky and brutal and should have been done differently, perhaps with a delegation of senior ministers going to Rudd first to say change or go. Yes, the consequences have been catastrophic for Gillard and for the ALP. “Blood will have blood,” as Dennis Glover, a former Gillard speechwriter who also wrote speeches for Rudd, said in a newspaper on Thursday.

But why did it happen? Why did so many Labor MPs resolve to vote against Rudd that he didn’t dare stand? Why was he thrashed in his 2012 challenge? Why have his numbers not significantly moved, despite all the government’s woes?

Because – it must be said again – Rudd was a poor prime minister. To his credit, he led the government’s brave and decisive response to the global financial crisis. His apology speech changed Australia and will be remembered for years to come. But beyond that he has few achievements, and the way he governed brought him down.

At the time of his 2012 challenge, seven ministers went public with fierce criticisms of Rudd’s governing style. When most of them made it clear they would not serve again in a Rudd cabinet, many commentators wrote this up as slander and character assassination of Rudd, or as one of those vicious but mysterious internal brawls that afflict the Labor Party from time to time. They missed the essential points: that the criticisms came from a diverse and representative set of ministers, and they had substance.

If the word of these seven ministers is not enough, consider the reporting of Rudd’s treatment of colleagues by Fairfax journalist David Marr in his 2010 Quarterly Essay, Power Trip. Or the words of Glover, who wrote last year that as a “member of the Gang of Four Hundred or So (advisers and speechwriters) I can assure you that the chaos and frustration described by Gillard supporters during February’s failed leadership challenge rang very, very true with about 375 of us.”

Consider the reporting of Rudd’s downfall by ABC journalist Barrie Cassidy in his book, Party Thieves. Never had numbers tumbled so quickly, Cassidy wrote. “That’s because Rudd himself drove them. His own behaviour had caused deep-seated resentment to take root.” Leaders had survived slumps before and would again. But “Rudd was treated differently because he was different: autocratic, exclusive, disrespectful and at times flat-out abusive”. Former Labor minister Barry Cohen told Cassidy: “If Rudd was a better bloke he would still be leader. But he pissed everybody off.”

These accounts tallied with my own observations when I worked as a speechwriter for Rudd in 2009. While my own experience of Rudd was both poor and brief, I worked with many people – 40 or more – who worked closely with him. Their accounts were always the same. While Rudd was charming to the outside world, behind closed doors he treated people with rudeness and contempt. At first I kept waiting for my colleagues to give me another side of Rudd: that he could be difficult but was at heart a good bloke. Yet apart from some conversations in which people praised his handling of the global financial crisis, no one ever did.

Since he lost power, is there any sign that Rudd has reflected on his time in office, accepted that he made mistakes, that he held deep and unaccountable grudges and treated people terribly?

Did he reflect on the rages he would fly into when people gave him advice he didn’t want, how he would put those people into what his staff called “the freezer”, sometimes not speaking to them for months or more? Did he reflect on the way he governed in a near permanent state of crisis, how his reluctance to make decisions until the very last moment coupled with a refusal to take unwelcome advice led his government into chaos by the middle of 2010, when his obsessive focus on his health reforms left the government utterly unprepared to deal with the challenges of the emissions trading scheme, the budget, the Henry tax review and the mining tax? To date there is no sign that he has learnt from the failures of his time as prime minister.

Through his wife, Rudd is currently the richest member of the Australian Commonwealth Parliament, and perhaps the richest person ever to be an MP. He is also fluent in Mandarin Chinese and famously intelligent, although perhaps not as bright as his predecessors as Labor leader, Gough Whitlam or Doc Evatt, or former ministers, Isaac Isaacs, Ted Theodore or Barry Jones. It is possible, of course, to have a first-rate mind and a second-rate temperament. An autocratic management style – unpopular within the Labor Party at any time, as Evatt and Whitlam both learnt – is even less appropriate when the Party lacks a majority in the House, and has to rely on a permanent, floating two-up game of ad hoc negotiations with Green and Independent MPs to pass legislation.

Combining actions

How might two actions be combined? Well, depending on the actions, we may be able to do one action and then the other, or we may be able to do the other and then the one, or maybe not. We call such a combination a sequence or concatenation of the two actions. In some cases, we may be able to do the two actions in parallel, both at the same time. We may have to start them simultaneously, or we may be able to start one before the other. Or, we may have to ensure they finish together, or that they jointly meet some other intermediate synchronization targets.

In some cases, we may be able to interleave them, doing part of one action, then part of the second, then part of the first again, what management consultants in telecommunications call multiplexing. For many human physical activities – such as learning to play the piano or learning to play golf – interleaving is how parallel activities are first learnt and complex motor skills acquired: first play a few bars of music on the piano with only the left hand, then the same bars with only the right, and keep practicing the hands on their own, and only after the two hands are each practiced individually do we try playing the piano with the two hands together.

Computer science, which I view as the science of delegation, knows a great deal about how actions may be combined, how they may be distributed across multiple actors, and what the meanings and consequences of these different combinations are likely to be. It is useful to have an exhaustive list of the possibilities. Let us suppose we have two actions, represented by A and B respectively. Then we may be able to do the following compound actions:

- Sequence: The execution of A followed by the execution of B, denoted A ; B

- Iterate: A executed n times, denoted A ^ n (This is sequential execution of a single action.)

- Parallelize: Both A and B are executed together, denoted A & B

- Interleave: Action A is partly executed, followed by part-execution of B, followed by continued part-execution of A, etc, denoted A || B

- Choose: Either A is executed or B is executed but not both, denoted A v B

- Combinations of the above: For example, with interleaving, only one action is ever being executed at one time. But it may be that the part-executions of A and B can overlap, so we have a combination of Parallel and Interleaved compositions of A and B.

Depending on the nature of the domain and the nature of the actions, not all of these compound actions may necessarily be possible. For instance, if action B has some pre-conditions before it can be executed, then the prior execution of A has to successfully achieve these pre-conditions in order for the sequence A ; B to be feasible.
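The compound actions listed above can be sketched in a few lines, under a deliberately crude assumption: each action is modelled as a list of named atomic steps, and a compound action is the resulting trace of steps. The function names and the example steps are illustrative only, and the sketch ignores pre-conditions altogether.

```python
from itertools import zip_longest

# Assumption: an action is a list of named atomic steps.
A = ["a1", "a2", "a3"]
B = ["b1", "b2"]

def sequence(a, b):
    """A ; B : all of A, followed by all of B."""
    return a + b

def iterate(a, n):
    """A ^ n : A executed n times in a row."""
    return a * n

def parallelize(a, b):
    """A & B : one step of each per time slot; None marks an idle slot."""
    return list(zip_longest(a, b))

def interleave(a, b):
    """A || B : alternate a step of A with a step of B, one step at a time."""
    trace = []
    for step_a, step_b in zip_longest(a, b):
        if step_a is not None:
            trace.append(step_a)
        if step_b is not None:
            trace.append(step_b)
    return trace

def choose(a, b, pick_first):
    """A v B : exactly one of the two actions is executed."""
    return a if pick_first else b

print(sequence(A, B))     # ['a1', 'a2', 'a3', 'b1', 'b2']
print(iterate(B, 2))      # ['b1', 'b2', 'b1', 'b2']
print(parallelize(A, B))  # [('a1', 'b1'), ('a2', 'b2'), ('a3', None)]
print(interleave(A, B))   # ['a1', 'b1', 'a2', 'b2', 'a3']
```

In this toy model interleaving never runs two steps at once, whereas parallel composition pairs them in the same time slot. That is the distinction drawn in the last item of the list.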

This stuff may seem very nitty-gritty, but anyone who’s ever asked a teenager to do some task they don’t wish to do, will know all the variations in which a required task can be done after, or alongside, or intermittently with, or be replaced instead by, some other task the teen would prefer to do. Machines, it turns out, are much like recalcitrant and literal-minded teenagers when it comes to commanding them to do stuff.

Taking a view vs. maximizing expected utility

The standard or classical model in decision theory is called Maximum Expected Utility (MEU) theory, which I have excoriated here and here (and which Cosma Shalizi satirized here). Its flaws and weaknesses for real decision-making have been pointed out by critics since its inception, six decades ago. Despite this, the theory is still taught in economics classes and MBA programs as a normative model of decision-making.

A key feature of MEU is that the decision-maker is required to identify ALL possible action options, and ALL consequential states of these options. He or she then reasons ACROSS these consequences by adding together the utilities of the consequential states, weighted by the likelihood that each state will occur.
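For readers who have not seen it written down, the MEU calculation amounts to very little code once the options, states, probabilities and utilities are given. The sketch below assumes all of those inputs are known and finite, which is exactly the assumption at issue. The option names and numbers are invented for illustration.

```python
def expected_utility(outcomes):
    """Sum of utilities of the consequential states, weighted by probability.

    `outcomes` is a list of (probability, utility) pairs for one action option.
    """
    return sum(probability * utility for probability, utility in outcomes)

def meu_choice(options):
    """Return the name of the option with the highest expected utility."""
    return max(options, key=lambda name: expected_utility(options[name]))

# Hypothetical options, each with every consequential state enumerated.
options = {
    "launch_early": [(0.6, 100.0), (0.4, -50.0)],
    "launch_late": [(0.9, 40.0), (0.1, -10.0)],
}

for name, outcomes in options.items():
    print(name, expected_utility(outcomes))
print("MEU choice:", meu_choice(options))
```

The arithmetic is trivial. The hard part, which the code simply takes as given, is producing the complete `options` table in the first place.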

However, financial and business planners do something completely contrary to this in everyday financial and business modeling. In developing a financial model for a major business decision or for a new venture, the collection of possible actions is usually infinite and the space of possible consequential states even more so. Making human sense of the possible actions and the resulting consequential states is usually a key reason for undertaking the financial modeling activity, and so cannot be an input to the modeling. Because of the explosion in the number of states and in their internal complexity, business planners cannot articulate all the actions and all the states, nor even usually a subset of these beyond a mere handful.

Therefore, planners typically choose to model just 3 or 4 states – usually called cases or scenarios – with each of these combining a complex mix of (a) assumed actions, (b) assumed stakeholder responses and (c) environmental events and parameters. The assumptions and parameter values are instantiated for each case, the model run, and the outputs of the 3 or 4 cases compared with one another. The process is usually repeated with different (but close) assumptions and parameter values, to gain a sense of the sensitivity of the model outputs to those assumptions.
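A toy version of this workflow might look as follows. The one-line profit model, the three cases and every parameter value are hypothetical, and a real financial model would have hundreds of assumptions rather than three. The point is only the shape of the process: instantiate each case, run the model, compare, then nudge the assumptions.

```python
def annual_profit(customers, revenue_per_customer, fixed_cost):
    """Hypothetical one-line business model."""
    return customers * revenue_per_customer - fixed_cost

# Each case bundles a set of assumed parameter values.
cases = {
    "Base Case": {"customers": 1000, "revenue_per_customer": 20.0, "fixed_cost": 15000.0},
    "Best Case": {"customers": 1500, "revenue_per_customer": 22.0, "fixed_cost": 15000.0},
    "Worst Case": {"customers": 600, "revenue_per_customer": 18.0, "fixed_cost": 15000.0},
}

# Run the model once per case and compare the outputs.
results = {name: annual_profit(**params) for name, params in cases.items()}

def sensitivity(params, key, change=0.10):
    """Re-run the model with one assumption moved down and up by `change`."""
    low = dict(params, **{key: params[key] * (1 - change)})
    high = dict(params, **{key: params[key] * (1 + change)})
    return annual_profit(**low), annual_profit(**high)

for name, profit in results.items():
    print(name, profit)
print("Base Case, customers +/-10%:", sensitivity(cases["Base Case"], "customers"))
```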

Often the scenarios will be labeled “Best Case”, “Worst Case”, “Base Case”, etc to identify the broad underlying principles that are used to make the relevant assumptions in each case. Actually adopting a financial model for (say) a new venture means assuming that one of these cases is close enough to current reality and its likely future development in the domain under study – ie, that one case is realistic. People in the finance world call this adoption of one case “taking a view” on the future.

Taking a view involves assuming (at least pro tem) that one trajectory (or one class of trajectories) describes the evolution of the states of some system. Such betting on the future is the complete opposite cognitive behaviour to reasoning over all the possible states before choosing an action, which the protagonists of the MEU model insist we all do. Yet the MEU model continues to be taught as a normative model for decision-making to MBA students who will spend their post-graduation life doing business planning by taking a view.

Let me count the ways

A cartoon I once saw had a manager asking his subordinate to list all the action-options available for some decision, then to list the pros and cons of each action-option and then to count them, pros and cons combined, and to choose the option with the highest combined total. Thus, if one option A had 2 pros and 1 con, and another option B had 1 pro and 5 cons, the latter would be selected. You might call this the Pointy-Haired Boss model of decision-making.

It is interesting to ask exactly why this is NOT a sensible way of deciding what to do in some situation. Most of us would say that firstly, this method ignores the direction of the arguments for and against doing something – it flattens out the positive and negative nature of (respectively) the pros and the cons. Even inserting positive and negative signs to the numbers of supporting arguments would be better: Thus, option A would have +2 and -1, giving a net value of +1, while option B would have +1 and -5, giving a net value of -4; choosing option A over B on this basis would feel better, since we are taking into account the direction of support or attack.

But even this additive approach ignores the strength of the support of each pro argument, and the strength of the attack of each con argument. In other words, even the additive approach still flattens something – in this case, the importance of each pro or con. We might then consider inserting some measure of value or importance to the arguments. If they involve any uncertainty, then we might also add some measure of that in some form or other, whether quantitative (eg, probabilities) or qualitative (eg, linguistic labels).
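The three scoring rules discussed so far can be compared side by side. The counts for options A and B are those given above. The weights in the third rule are invented purely to show that, once strength is taken into account, the ranking can change again.

```python
# Counts of pro and con arguments for each option, as in the text.
counts = {"A": {"pros": 2, "cons": 1}, "B": {"pros": 1, "cons": 5}}

def combined_total(option):
    """Pointy-Haired Boss rule: count pros and cons together."""
    return option["pros"] + option["cons"]

def net_count(option):
    """Signed rule: pros count +1 each, cons count -1 each."""
    return option["pros"] - option["cons"]

# Hypothetical strengths for each argument (larger means more important).
weights = {
    "A": {"pros": [2.0, 1.0], "cons": [4.0]},
    "B": {"pros": [10.0], "cons": [1.0, 1.0, 1.0, 1.0, 1.0]},
}

def weighted_net(option):
    """Weighted rule: sum of pro strengths minus sum of con strengths."""
    return sum(option["pros"]) - sum(option["cons"])

def best(scores):
    """Name of the option with the highest score."""
    return max(scores, key=scores.get)

boss = {name: combined_total(o) for name, o in counts.items()}
signed = {name: net_count(o) for name, o in counts.items()}
weighted = {name: weighted_net(o) for name, o in weights.items()}

print(boss, best(boss))          # {'A': 3, 'B': 6} B
print(signed, best(signed))      # {'A': 1, 'B': -4} A
print(weighted, best(weighted))  # {'A': -1.0, 'B': 5.0} B
```

With these made-up weights the single strong argument for B outweighs its five weak cons, so counting alone, even with signs, would have been misleading.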

But let us stay with the pointy-haired boss for a moment. We have 3 arguments (2 pro, 1 con) under A, and 6 arguments (1 pro, 5 con) under B. Why do we have more arguments for some options than for others? It could be because we have thought about some options more than we have others, or because, through our own direct experience or observation of the experiences of others, we know more about the likely upsides and downsides of some options. The grass is always greener on the other side of the fence, for example, because we’ve not yet been over that side of the fence to see the mud there. On our side, we can clearly see all the downsides as well as the ups. Human decision biases may also lead us to emphasize the negative sides of more familiar situations, while emphasizing the positives of less familiar situations.

A model of decision-making I have proposed before – retroflexive decision-making – understands that real managers in real organizations making important decisions don’t generally have only the one pass at deciding the possible decision-options and articulating the pros and cons of each. Rather, managers work iteratively and collectively, sharing their knowledge and experiences, drawing on wide sources, and usually seeking to build a consensus view. In addition, they almost never work in a vacuum – rather, they work within a context of legacy policies and systems, with particular corporate strengths, experiences, skills, expertise, technologies, business partners, trading relationships, regulatory environments, and even personalities, and within overall intended future corporate directions. All this context means that some decision-options will already be favoured and others disfavoured. That much is human – and corporate – nature. Consequently, decision-makers will naturally seek to strengthen the pros and the positive consequences of some options (including, but usually not only, the favoured ones), while weakening or mitigating the cons and negative consequences of those options.

None of this is wrong – in fact, it is good management. Every decision-option in any even moderately complex domain will have both pros and cons. Making work whichever option is selected will be a matter of finding ways to eliminate or mitigate the negative consequences, while at the same time seeking to strengthen the positive consequences. Those options where we know more about the pros and cons are also likely to be the options where we have the greatest leverage from experience, knowledge, and expertise to accentuate the pros and to mitigate the cons. To that extent, the Pointy-Haired Boss model is not necessarily as stupid as it may seem.

The silence of the wolves

The tenth anniversary of the second Iraq War being upon us, there is naturally lots of commentary and coal-raking. Some of this involves re-writing of history. For example, many of those who participated in the February 2003 demonstrations against the war seem to have forgotten that, in Britain, they were not able to convince a majority of MPs to vote against the House of Commons resolution supporting invasion, as Norm rightly notes. However, many then on the other side too seem to have forgotten something: that the leaders of the West – President Bush, Vice-President Cheney, Defense Secretary Rumsfeld, Prime Ministers Blair and Howard – had to be dragged by the public, kicking and screaming and much against their will, to explain their decision to invade Iraq to their own citizens.

World-wide demonstrations against the war took place on Saturday 15 February 2003. Only on that day itself did Tony Blair, in a speech in Glasgow, first present in public his arguments in favour of military action. Only on 25 February 2003 – ten days after those massive street protests – did Tony Blair finally agree to a House of Commons debate on the issue. Donald Rumsfeld was not able or not willing to provide a convincing justification to even the Foreign Minister of Germany, Joschka Fischer (“You have to make the case!”, Fischer said to Rumsfeld, in English, in the midst of a speech in German, in public, at a security conference in Munich, 9 February 2003, video here.) And most notoriously of all, the Australian Senate, for the first and only time in its (then) 102-year history passed on 5 February 2003 a censure motion against the Government and a vote of no confidence in the Prime Minister John Howard, for their failure to provide any case at all for the Government’s support of the invasion.

Yet we now know that the decision by the Bush administration to invade Iraq had most probably been made by August 2002, and the question of invasion had been the focus of intense and loud public argument in every house and pub and office in the western world for at least three months. The silence of our leaders was so noticeable that, at the time, I speculated whether there were other good reasons for that silence, beside cowardice or malfeasance (blog post of 2003-02-14). We still don’t know for certain why no decision-maker would make their case public, but I suspect now it was because the case was built on decision-making about potential events with small probabilities but with catastrophic consequences: IF Saddam Hussein acquired weapons of mass destruction AND IF he used them against the West, the results would be far worse than even 9/11. Although the probabilities of these conditions being true were judged to be very small, the consequences of them being true would be so serious that the conditions had to be precluded from happening, at all costs. This, to me, would have been a compelling argument, had it ever been made in public.

Now our press carry stories of Tony Blair saying he had “long since given up trying to persuade people it was the right decision.” For goodness sake, he hardly even tried! Here is Andrew Rawnsley writing in The Observer on 14 September 2003:

Mr Blair is being punished not because he did the wrong thing, but because he went about it the wrong way. The Prime Minister didn’t trust the British people to follow the moral argument for dealing with Saddam. This mistrust in them they now reciprocate back to him. For that, Tony Blair has only himself to blame.

Bayesianism in science

Bayesians are so prevalent in Artificial Intelligence (and, to be honest, so strident) that it can sometimes be lonely being a Frequentist. So it is nice to see a critical review of Nate Silver’s new book on prediction from a frequentist perspective. The reviewers are Gary Marcus and Ernest Davis from New York University, and here are some paras from their review in The New Yorker:

“Silver’s one misstep comes in his advocacy of an approach known as Bayesian inference. According to Silver’s excited introduction,

Bayes’ theorem is nominally a mathematical formula. But it is really much more than that. It implies that we must think differently about our ideas.

Lost until Chapter 8 is the fact that the approach Silver lobbies for is hardly an innovation; instead (as he ultimately acknowledges), it is built around a two-hundred-fifty-year-old theorem that is usually taught in the first weeks of college probability courses. More than that, as valuable as the approach is, most statisticians see it as only a partial solution to a very large problem.

A Bayesian approach is particularly useful when predicting outcome probabilities in cases where one has strong prior knowledge of a situation. Suppose, for instance (borrowing an old example that Silver revives), that a woman in her forties goes for a mammogram and receives bad news: a “positive” mammogram. However, since not every positive result is real, what is the probability that she actually has breast cancer? To calculate this, we need to know four numbers. The fraction of women in their forties who have breast cancer is 0.014, which is about one in seventy. The fraction who do not have breast cancer is therefore 1 – 0.014 = 0.986. These fractions are known as the prior probabilities. The probability that a woman who has breast cancer will get a positive result on a mammogram is 0.75. The probability that a woman who does not have breast cancer will get a false positive on a mammogram is 0.1. These are known as the conditional probabilities. Applying Bayes’s theorem, we can conclude that, among women who get a positive result, the fraction who actually have breast cancer is (0.014 x 0.75) / ((0.014 x 0.75) + (0.986 x 0.1)) = 0.1, approximately. That is, once we have seen the test result, the chance is about ninety per cent that it is a false positive. In this instance, Bayes’s theorem is the perfect tool for the job.
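The arithmetic in the mammogram example can be checked with a few lines of Python. This is only a sketch: the function and parameter names are my own illustrative choices, and the three input numbers are the ones quoted in the review.

```python
def posterior_given_positive(prior, sensitivity, false_positive_rate):
    """Bayes' theorem for a binary condition and a positive test result.

    prior               -- P(condition), e.g. 0.014
    sensitivity         -- P(positive | condition), e.g. 0.75
    false_positive_rate -- P(positive | no condition), e.g. 0.1
    """
    true_positives = prior * sensitivity
    false_positives = (1 - prior) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Figures quoted in the review for women in their forties.
p = posterior_given_positive(prior=0.014, sensitivity=0.75,
                             false_positive_rate=0.1)
print(f"P(cancer | positive mammogram) = {p:.3f}")
```

The result is a little under 0.1, which matches the review's "about ninety per cent" chance that a positive result is a false positive.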

This technique can be extended to all kinds of other applications. In one of the best chapters in the book, Silver gives a step-by-step description of the use of probabilistic reasoning in placing bets while playing a hand of Texas Hold ’em, taking into account the probabilities on the cards that have been dealt and that will be dealt; the information about opponents’ hands that you can glean from the bets they have placed; and your general judgment of what kind of players they are (aggressive, cautious, stupid, etc.).

But the Bayesian approach is much less helpful when there is no consensus about what the prior probabilities should be. For example, in a notorious series of experiments, Stanley Milgram showed that many people would torture a victim if they were told that it was for the good of science. Before these experiments were carried out, should these results have been assigned a low prior (because no one would suppose that they themselves would do this) or a high prior (because we know that people accept authority)? In actual practice, the method of evaluation most scientists use most of the time is a variant of a technique proposed by the statistician Ronald Fisher in the early 1900s. Roughly speaking, in this approach, a hypothesis is considered validated by data only if the data pass a test that would be failed ninety-five or ninety-nine per cent of the time if the data were generated randomly. The advantage of Fisher’s approach (which is by no means perfect) is that to some degree it sidesteps the problem of estimating priors where no sufficient advance information exists. In the vast majority of scientific papers, Fisher’s statistics (and more sophisticated statistics in that tradition) are used.
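To make the reviewers' rough description of significance testing concrete, here is a minimal sketch of one simple test in that tradition: an exact one-sided binomial test of whether a coin is biased towards heads. The coin example, the function names and the 0.05 threshold are my assumptions for illustration; they do not come from the review, and real studies use many other tests. Note that no prior probability appears anywhere in the calculation.

```python
from math import comb


def one_sided_p_value(successes, trials, null_prob=0.5):
    """P(X >= successes) when X ~ Binomial(trials, null_prob).

    This is the probability of seeing a result at least this extreme
    if the data were generated by chance alone (the null hypothesis).
    """
    return sum(
        comb(trials, k) * null_prob**k * (1 - null_prob) ** (trials - k)
        for k in range(successes, trials + 1)
    )


def is_significant(successes, trials, alpha=0.05):
    """Reject the null hypothesis only if the p-value falls below alpha."""
    return one_sided_p_value(successes, trials) < alpha


# Hypothetical data: 60 heads versus 55 heads in 100 tosses.
for heads in (60, 55):
    print(heads, one_sided_p_value(heads, 100), is_significant(heads, 100))
```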

Unfortunately, Silver’s discussion of alternatives to the Bayesian approach is dismissive, incomplete, and misleading. In some cases, Silver tends to attribute successful reasoning to the use of Bayesian methods without any evidence that those particular analyses were actually performed in Bayesian fashion. For instance, he writes about Bob Voulgaris, a basketball gambler,

Bob’s money is on Bayes too. He does not literally apply Bayes’ theorem every time he makes a prediction. But his practice of testing statistical data in the context of hypotheses and beliefs derived from his basketball knowledge is very Bayesian, as is his comfort with accepting probabilistic answers to his questions.

But, judging from the description in the previous thirty pages, Voulgaris follows instinct, not fancy Bayesian math. Here, Silver seems to be using “Bayesian” not to mean the use of Bayes’s theorem but, rather, the general strategy of combining many different kinds of information.

To take another example, Silver discusses at length an important and troubling paper by John Ioannidis, “Why Most Published Research Findings Are False,” and leaves the reader with the impression that the problems that Ioannidis raises can be solved if statisticians use a Bayesian approach rather than following Fisher. Silver writes:

[Fisher’s classical] methods discourage the researcher from considering the underlying context or plausibility of his hypothesis, something that the Bayesian method demands in the form of a prior probability. Thus, you will see apparently serious papers published on how toads can predict earthquakes… which apply frequentist tests to produce “statistically significant” but manifestly ridiculous findings.

But NASA’s 2011 study of toads was actually important and useful, not some “manifestly ridiculous” finding plucked from thin air. It was a thoughtful analysis of groundwater chemistry that began with a combination of naturalistic observation (a group of toads had abandoned a lake in Italy near the epicenter of an earthquake that happened a few days later) and theory (about ionospheric disturbance and water composition).

The real reason that too many published studies are false is not because lots of people are testing ridiculous things, which rarely happens in the top scientific journals; it’s because in any given year, drug companies and medical schools perform thousands of experiments. In any study, there is some small chance of a false positive; if you do a lot of experiments, you will eventually get a lot of false positive results (even putting aside self-deception, biases toward reporting positive results, and outright fraud)—as Silver himself actually explains two pages earlier. Switching to a Bayesian method of evaluating statistics will not fix the underlying problems; cleaning up science requires changes to the way in which scientific research is done and evaluated, not just a new formula.
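The reviewers' point about sheer volume can be put in numbers. The sketch below assumes, purely for illustration, that every experiment tests an effect that does not exist, that experiments are independent, and that each uses a 0.05 significance threshold; the review itself gives no such figures, and the function names are mine.

```python
def expected_false_positives(n_experiments, alpha=0.05):
    """Expected number of false positives when every null hypothesis is true."""
    return n_experiments * alpha


def prob_at_least_one_false_positive(n_experiments, alpha=0.05):
    """Chance that at least one of n independent true-null tests 'succeeds'."""
    return 1 - (1 - alpha) ** n_experiments


# Hypothetical numbers of experiments run in a year.
for n in (1, 20, 1000):
    print(n, expected_false_positives(n),
          prob_at_least_one_false_positive(n))
```

Under these assumptions, a thousand experiments would yield around fifty spurious "findings" whichever formula is later used to summarise them, which is why the reviewers locate the problem in how research is done rather than in the choice of statistics.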

It is perfectly reasonable for Silver to prefer the Bayesian approach—the field has remained split for nearly a century, with each side having its own arguments, innovations, and work-arounds—but the case for preferring Bayes to Fisher is far weaker than Silver lets on, and there is no reason whatsoever to think that a Bayesian approach is a “think differently” revolution. “The Signal and the Noise” is a terrific book, with much to admire. But it will take a lot more than Bayes’s very useful theorem to solve the many challenges in the world of applied statistics.” [Links in original]

It is also worth adding here that there is a very good reason experimental sciences adopted Frequentist approaches (what the reviewers call Fisher’s methods) in journal publications. That reason is that science is intended to be a search for objective truth using objective methods. Experiments are – or should be – replicable by anyone. How can subjective methods play any role in such an enterprise? Why should the journal Nature or any of its readers care what the prior probabilities of the experimenters were before an experiment? If these prior probabilities make a difference to the posterior (post-experiment) probabilities, then this is the insertion of a purely subjective element into something that should be objective and replicable. And if the actual numeric values of the prior probabilities don’t matter to the posterior probabilities (as some Bayesian theorems would suggest), then why does the methodology include them?